Global Bullies, Climate Models and Dishonesty in Climate Science

May 26, 2012

By Dr. DAVID EVANS | THE REAL AGENDA | MAY 25, 2012

The debate about global warming has reached ridiculous proportions and is full of micro-thin half-truths and misunderstandings. I am a scientist who was on the carbon gravy train, understands the evidence, and was once an alarmist but is now a skeptic. Watching this issue unfold has been amusing but, lately, worrying. This issue is tearing society apart, making fools and liars out of our politicians.

Let’s set a few things straight.

The whole idea that carbon dioxide is the main cause of the recent global warming is based on a guess that was proved false by empirical evidence during the 1990s. But the gravy train was too big, with too many jobs, industries, trading profits, political careers, and the possibility of world government and total control riding on the outcome. So rather than admit they were wrong, the governments, and their tame climate scientists, now cheat and lie outrageously to maintain the fiction that carbon dioxide is a dangerous pollutant.

Let’s be perfectly clear. Carbon dioxide is a greenhouse gas, and other things being equal, the more carbon dioxide in the air, the warmer the planet. Every bit of carbon dioxide that we emit warms the planet. But the issue is not whether carbon dioxide warms the planet, but how much.

Most scientists, on both sides, also agree on how much a given increase in the level of carbon dioxide raises the planet’s temperature, if just the extra carbon dioxide is considered. These calculations come from laboratory experiments; the basic physics has been well known for a century.

The disagreement comes about what happens next.

The planet reacts to that extra carbon dioxide, which changes everything. Most critically, the extra warmth causes more water to evaporate from the oceans. But does the water hang around and increase the height of moist air in the atmosphere, or does it simply create more clouds and rain? Back in 1980, when the carbon dioxide theory started, no one knew. The alarmists guessed that it would increase the height of moist air around the planet, which would warm the planet even further, because the water vapor in moist air is also a greenhouse gas.

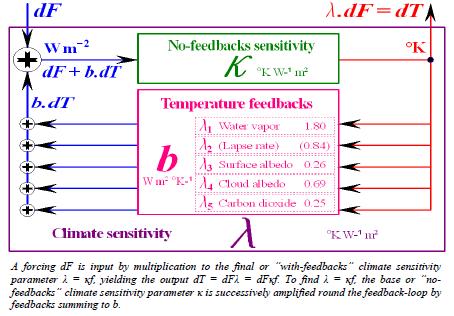

This is the core idea of every official climate model: for each bit of warming due to carbon dioxide, they claim it ends up causing three bits of warming in total once the extra moist air is counted. The climate models amplify the carbon dioxide warming by a factor of three, so two thirds of their projected warming is due to extra moist air (and other factors), and only one third is due to extra carbon dioxide.
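The split between one third and two thirds follows directly from the factor of three. A minimal sketch of that arithmetic is below; the function name `warming_shares` and its parameter are illustrative only and do not come from any climate model.

```python
from fractions import Fraction


def warming_shares(amplification):
    """Split total projected warming into direct and amplified shares.

    `amplification` is total projected warming divided by the warming
    from extra carbon dioxide alone (the article quotes three).
    """
    amplification = Fraction(amplification)
    if amplification <= 0:
        raise ValueError("amplification must be positive")
    direct_share = 1 / amplification      # share due to carbon dioxide itself
    amplified_share = 1 - direct_share    # share due to moist air and other factors
    return direct_share, amplified_share


direct, amplified = warming_shares(3)
print(f"direct share: {direct}, amplified share: {amplified}")
```

With an amplification of one (no amplification at all), the same function attributes all of the warming to carbon dioxide, which is the baseline both sides are said to agree on.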

I’ll bet you didn’t know that. Hardly anyone in the public does, but it’s the core of the issue. All the disagreements, lies, and misunderstanding spring from this. The alarmist case is based on this guess about moisture in the atmosphere, and there is simply no evidence for the amplification that is at the core of their alarmism. Which is why the alarmists keep so quiet about it and you’ve never heard of it before. And it tells you what a poor job the media have done in covering this issue.

Weather balloons have been measuring the atmosphere since the 1960s, many thousands of them every year. The climate models all predict that as the planet warms, a hot-spot of moist air will develop over the tropics about 10 km up, as the layer of moist air expands upwards into the cool dry air above. During the warming of the late 1970s, 80s, and 90s, the weather balloons found no hot-spot. None at all. Not even a small one. This evidence proves that the climate models are fundamentally flawed, that they greatly overestimate the temperature increases due to carbon dioxide.

This evidence first became clear around the mid 1990s.

At this point official “climate science” stopped being a science. You see, in science empirical evidence always trumps theory, no matter how much you are in love with the theory. If theory and evidence disagree, real scientists scrap the theory. But official climate science ignored the crucial weather balloon evidence, and other subsequent evidence that backs it up, and instead clung to their carbon dioxide theory — that just happens to keep them in well-paying jobs with lavish research grants, and gives great political power to their government masters.

There are now several independent pieces of evidence showing that the earth responds to the warming due to extra carbon dioxide by dampening the warming. Every long-lived natural system behaves this way, counteracting any disturbance, otherwise the system would be unstable. The climate system is no exception, and now we can prove it.

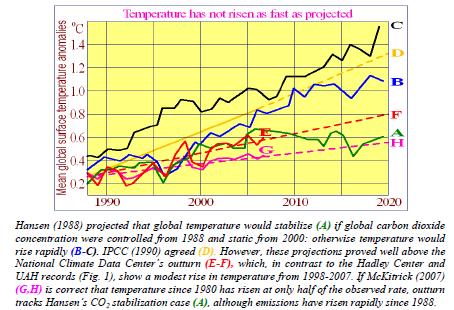

But the alarmists say the exact opposite, that the climate system amplifies any warming due to extra carbon dioxide, and is potentially unstable. Surprise surprise, their predictions of planetary temperature made in 1988 to the US Congress, and again in 1990, 1995, and 2001, have all proved much higher than reality.

They keep lowering the temperature increases they expect, from 0.30°C per decade in 1990, to 0.20°C per decade in 2001, and now 0.15°C per decade, yet they have the gall to tell us “it’s worse than expected”. These people are not scientists. They over-estimate the temperature increases due to carbon dioxide, selectively deny evidence, and now they cheat and lie to conceal the truth.
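To put the size of those revisions in perspective, here is a small sketch that expresses each quoted rate as a percentage reduction from the 1990 figure. The three rates are the ones quoted above; the label "current" is an assumption standing in for the undated "now" figure, and the function name is illustrative.

```python
from decimal import Decimal

# Projected warming rates quoted in the text, in degrees C per decade.
# "current" is a placeholder label for the article's undated "now" figure.
projections = [
    ("1990", Decimal("0.30")),
    ("2001", Decimal("0.20")),
    ("current", Decimal("0.15")),
]


def reduction_from_first(rates):
    """Return each rate's percentage reduction relative to the first rate."""
    first = rates[0][1]
    return [(label, (first - rate) / first * 100) for label, rate in rates]


for label, pct in reduction_from_first(projections):
    print(f"{label}: {pct:.0f}% below the 1990 figure")
```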

One way they cheat is in the way they measure temperature.

The official thermometers are often located in the warm exhaust of air conditioning outlets, over hot tarmac at airports where they get blasts of hot air from jet engines, at wastewater plants where they get warmth from decomposing sewage, or in hot cities choked with cars and buildings. Global warming is measured in tenths of a degree, so any extra heating nudge is important. In the US, nearly 90% of official thermometers surveyed by volunteers violate official siting requirements that they not be too close to an artificial heating source. Nearly 90%! The photos of these thermometers are on the Internet; you can get to them via the corruption paper at my site, sciencespeak.com. Look at the photos, and you’ll never trust a government climate scientist again.

They place their thermometers in warm localities, and call the results “global” warming. Anyone can understand that this is cheating. They say that 2010 is the warmest recent year, but it was only the warmest at various airports, selected air conditioners, and certain car parks.

Global temperature is also measured by satellites, which measure nearly the whole planet 24/7 without bias. The satellites say the hottest recent year was 1998, and that since 2001 the global temperature has leveled off.

So it’s a question of trust.

If it really is warming up as the government climate scientists say, why do they present only the surface thermometer results and not mention the satellite results? And why do they put their thermometers near artificial heating sources? This is so obviously a scam now.

So what is really going on with the climate?

The earth has been in a warming trend since the depth of the Little Ice Age around 1680. Human emissions of carbon dioxide were negligible before 1850 and have nearly all come after WWII, so human carbon dioxide cannot possibly have caused the trend. Within the trend, the Pacific Decadal Oscillation causes alternating global warming and cooling for 25 to 30 years at a time in each direction. We have just finished a warming phase, so expect mild global cooling for the next two decades.

We are now at an extraordinary juncture.

Official climate science, which is funded and directed entirely by government, promotes a theory that is based on a guess about moist air that is now a known falsehood. Governments gleefully accept their advice, because the only way to curb emissions is to impose taxes and extend government control over all energy use. And to curb emissions on a world scale might even lead to world government. How exciting for the political class!

A carbon tax?

Even if Australia stopped emitting all carbon dioxide tomorrow, completely shut up shop and went back to the stone age, according to the official government climate models it would be cooler in 2050 by about 0.015 degrees. But their models exaggerate tenfold – in fact our sacrifices would make the planet in 2050 a mere 0.0015 degrees cooler!
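The second figure is simply the first divided by the claimed exaggeration factor. A minimal sketch of that step, using the two numbers quoted above (the function and parameter names are illustrative, not from any official model):

```python
from decimal import Decimal


def corrected_cooling(model_cooling_c, exaggeration_factor):
    """Scale a model-projected cooling down by a claimed exaggeration factor."""
    factor = Decimal(exaggeration_factor)
    if factor <= 0:
        raise ValueError("exaggeration_factor must be positive")
    return Decimal(model_cooling_c) / factor


# 0.015 degrees is the model figure quoted in the text; tenfold is the
# article's own estimate of how much the models exaggerate.
print(corrected_cooling("0.015", 10))
```

Decimal arithmetic is used here so that the result matches the quoted figure exactly rather than picking up floating-point rounding.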

Sorry, but you’ve been had.

Finally, to those of you who still believe the planet is in danger from our carbon dioxide emissions: sorry, but you’ve been had. Yes, carbon dioxide is a cause of global warming, but it’s so minor it’s not worth doing much about.

This article first appeared on JoNova

Dr David Evans consulted full-time for the Australian Greenhouse Office (now the Department of Climate Change) from 1999 to 2005, and part-time from 2008 to 2010, modeling Australia’s carbon in plants, debris, mulch, soils, and forestry and agricultural products. Evans is a mathematician and engineer, with six university degrees including a PhD from Stanford University in electrical engineering. The area of human endeavor with the most experience and sophistication in dealing with feedbacks and analyzing complex systems is electrical engineering, and the most crucial and disputed aspects of understanding the climate system are the feedbacks. The evidence supporting the idea that CO2 emissions were the main cause of global warming reversed itself from 1998 to 2006, causing Evans to move from being a warmist to a skeptic.