A special report from Christopher Monckton of Brenchley to all Climate Alarmists, Consensus Theorists and Anthropogenic Global Warming Supporters

Continues from Part 3

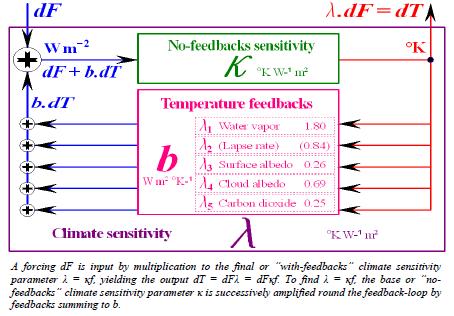

Since the feedback multiplier $f = (1 - b\kappa)^{-1} \to \infty$ as $b \to \kappa^{-1} = 3.2\ \mathrm{W\,m^{-2}\,K^{-1}}$, the feedback sum $b$ cannot exceed $3.2\ \mathrm{W\,m^{-2}\,K^{-1}}$ without inducing a runaway greenhouse effect. No such effect has been observed or inferred in more than half a billion years of climate, even though the concentration of CO2 in the Cambrian atmosphere approached 20 times today’s, with an inferred mean global surface temperature no more than 7 K higher than today’s (Figure 7). Moreover, a feedback-induced runaway greenhouse effect would occur even in today’s climate if $b \geq 3.2\ \mathrm{W\,m^{-2}\,K^{-1}}$, yet it has not occurred. The IPCC’s high-end estimates of the magnitude of individual temperature feedbacks are therefore very likely to be excessive, implying that its central estimates are also likely to be excessive.
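The divergence described above can be checked numerically. The sketch below is a minimal Python illustration, assuming κ is taken as exactly the reciprocal of the 3.2 W m⁻² K⁻¹ threshold quoted in the text (κ = 0.3125 K per W m⁻²). The function name `feedback_multiplier` is hypothetical, and the sketch simply returns infinity once the loop gain bκ reaches 1 rather than modelling anything beyond that point.

```python
import math

# Assumption: kappa is set to the reciprocal of the 3.2 W m^-2 K^-1
# threshold quoted in the text, i.e. 0.3125 K per W m^-2.
KAPPA_THRESHOLD_CASE = 1 / 3.2


def feedback_multiplier(b: float, kappa: float) -> float:
    """Return the Bode-style multiplier f = (1 - b*kappa)^-1.

    b is the feedback sum (W m^-2 K^-1) and kappa the base sensitivity
    parameter (K per W m^-2). Once the loop gain b*kappa reaches 1 the
    expression diverges, so this sketch returns math.inf there.
    """
    loop_gain = b * kappa
    if loop_gain >= 1.0:
        return math.inf
    return 1.0 / (1.0 - loop_gain)


if __name__ == "__main__":
    # f grows without bound as b approaches 1/kappa = 3.2 W m^-2 K^-1.
    for b in (1.0, 2.0, 3.0, 3.1, 3.2):
        f = feedback_multiplier(b, KAPPA_THRESHOLD_CASE)
        print(f"b = {b:.1f} W m^-2 K^-1 -> f = {f:.2f}")
```

Running it shows f climbing from about 1.45 at b = 1 to 32 at b = 3.1 and becoming infinite at b = 3.2, which is the instability threshold the paragraph refers to.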

Figure 7

Fluctuating CO2 but stable temperature for 600m years

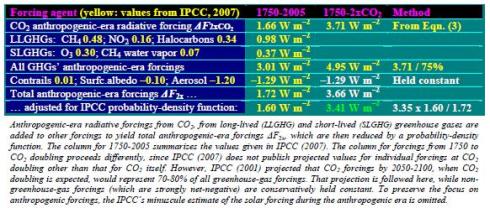

Millions of years before present

Since absence of correlation necessarily implies absence of causation, Figure 7 confirms what the recent temperature record implies: the causative link between changes in CO2 concentration and changes in temperature cannot be as strong as the IPCC has suggested. The implications for climate sensitivity are self-evident. Figure 7 indicates that in the Cambrian era, when CO2 concentration was ~25 times that which prevailed in the IPCC’s reference year of 1750, the temperature was some 8.5 °C higher than it was in 1750. Yet the IPCC’s current central estimate is that a mere doubling of CO2 concentration compared with 1750 would increase temperature by almost 40% of the increase that is thought to have arisen in geological times from a 20-fold increase in CO2 concentration (IPCC, 2007).
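The “almost 40%” comparison in the paragraph above is a simple ratio of two temperature changes. A minimal Python check follows, using the 8.5 °C Cambrian figure given here and the 3.26 K IPCC central estimate quoted elsewhere in this report; a temperature difference has the same magnitude in kelvin and in degrees Celsius. The function name is illustrative only.

```python
def fraction_of_cambrian_warming(sensitivity_k: float, cambrian_warming_k: float) -> float:
    """Ratio of the warming per CO2 doubling to the inferred Cambrian warming.

    Both arguments are temperature differences, so K and degrees C are interchangeable.
    """
    return sensitivity_k / cambrian_warming_k


IPCC_CENTRAL_SENSITIVITY_K = 3.26  # IPCC (2007) central estimate, as quoted in this report
CAMBRIAN_WARMING_K = 8.5           # Cambrian excess over 1750, as read from Figure 7 in the text

ratio = fraction_of_cambrian_warming(IPCC_CENTRAL_SENSITIVITY_K, CAMBRIAN_WARMING_K)
print(f"{ratio:.0%}")  # about 38%, i.e. "almost 40%"
```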

How could such overstatements of individual feedbacks have arisen? Not only is it impossible to obtain empirical confirmation of the value of any feedback by direct measurement; it is also questionable whether the feedback equation presented in Bode (1945) is appropriate to the climate. That equation was intended to model feedbacks in linear electronic circuits, yet many temperature feedbacks (the water-vapor and CO2 feedbacks, for instance) are non-linear. Feedbacks, of course, induce non-linearity in linear objects; nevertheless, the Bode equation is valid only for objects whose initial state is linear. The climate is not a linear object, nor are most of the climate-relevant temperature feedbacks linear. The water-vapor feedback is an interesting instance of the non-linearity of temperature feedbacks: the increase in water-vapor concentration as the atmosphere warms is near-exponential, but the forcing effect of the additional water vapor is logarithmic. The IPCC’s use of the Bode equation, even as a simplifying assumption, is accordingly questionable.

IPCC (2001: ch. 7) devoted an entire chapter to feedbacks but did not assign a value to each feedback it mentioned. Nor did the IPCC assign a “Level of Scientific Understanding” to each feedback, as it had to each forcing. In IPCC (2007), the principal climate-relevant feedbacks are quantified for the first time, but, again, no “Level of Scientific Understanding” is assigned to them, even though they account for more than twice as much forcing as the greenhouse-gas and other anthropogenic-era forcings to which “Levels of Scientific Understanding” are assigned.

Now that the IPCC has published its estimates of the forcing effects of individual feedbacks for the first time, numerous papers challenging its chosen values have appeared in the peer-reviewed literature. Notable among these are Wentz et al. (2007), who suggest that the IPCC has failed to allow for two-thirds of the cooling effect of evaporation in its evaluation of the water-vapor feedback, and Spencer (2007), who points out that the cloud-albedo feedback, regarded by the IPCC as second in magnitude only to the water-vapor feedback, should in fact be negative rather than strongly positive.

It is, therefore, prudent and conservative to restore the values κ ≈ 0.24 and f ≈ 2.08 that are derivable from IPCC (2001), adjusting them slightly to maintain consistency with Eqn. (27). Accordingly, our revised central estimate of the feedback multiplier f is:

$$f = (1 - b\kappa)^{-1} \approx (1 - 2.16 \times 0.242)^{-1} \approx 2.095 \tag{29}$$
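The arithmetic behind Eqn. (29) can be reproduced directly. The Python sketch below also inverts the multiplier formula, b = (1 − 1/f)/κ, to show one way a feedback sum of about 2.16 W m⁻² K⁻¹ is consistent with the κ ≈ 0.24 and f ≈ 2.08 values said to be derivable from IPCC (2001). That inversion is an illustration, not necessarily the derivation the authors used, and both helper names are hypothetical.

```python
def feedback_multiplier(b: float, kappa: float) -> float:
    """f = (1 - b*kappa)^-1; only defined here for loop gain b*kappa < 1."""
    loop_gain = b * kappa
    if loop_gain >= 1.0:
        raise ValueError("b*kappa >= 1: multiplier diverges (runaway case)")
    return 1.0 / (1.0 - loop_gain)


def implied_feedback_sum(f: float, kappa: float) -> float:
    """Invert f = (1 - b*kappa)^-1 to recover b = (1 - 1/f) / kappa."""
    return (1.0 - 1.0 / f) / kappa


# Feedback sum implied by the IPCC (2001)-derived values kappa ~ 0.24, f ~ 2.08.
b_implied = implied_feedback_sum(2.08, 0.24)   # ~2.16 W m^-2 K^-1

# Eqn. (29): revised multiplier with b = 2.16 and kappa = 0.242.
f_revised = feedback_multiplier(2.16, 0.242)   # ~2.095

print(f"b ~ {b_implied:.2f} W m^-2 K^-1, f ~ {f_revised:.3f}")
```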

Final climate sensitivity

Substituting the revised values derived for the three factors of $\Delta T_\lambda$ into Eqn. (1), our re-evaluated central estimate of climate sensitivity is their product:

$$\Delta T_\lambda = \Delta F_{2\times}\,\kappa\,f \approx 1.135 \times 0.242 \times 2.095 \approx 0.58\ \mathrm{K} \tag{30}$$
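Eqn. (30) is a plain product of three factors. The Python sketch below evaluates it with the revised values given in the text and, for comparison, reports the result as a fraction of the IPCC’s 3.26 K central estimate quoted in this section. The function name is illustrative; units follow the text (ΔF₂ₓ in W m⁻², κ in K per W m⁻², f dimensionless).

```python
def climate_sensitivity(delta_f_2x: float, kappa: float, f: float) -> float:
    """Eqn. (30): warming per CO2 doubling, Delta T = Delta F_2x * kappa * f, in K."""
    return delta_f_2x * kappa * f


DELTA_F_2X = 1.135     # W m^-2, revised forcing for a doubling of CO2
KAPPA = 0.242          # K per W m^-2, revised base sensitivity parameter
F = 2.095              # revised feedback multiplier from Eqn. (29)
IPCC_CENTRAL_K = 3.26  # IPCC central estimate quoted in the text

delta_t = climate_sensitivity(DELTA_F_2X, KAPPA, F)
print(f"Delta T ~ {delta_t:.2f} K, about {delta_t / IPCC_CENTRAL_K:.0%} of the IPCC central estimate")
```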

Theoretically, empirically, and in the literature that we have extensively cited, each of the values we have chosen as our central estimate is arguably more justifiable (and is certainly no less justifiable) than the substantially higher value selected by the IPCC. Accordingly, it is very likely that, in response to a doubling of pre-industrial carbon dioxide concentration, $T_S$ will rise not by the 3.26 K suggested by the IPCC but by less than 1 K.

Discussion

We have set out and then critically examined a detailed account of the IPCC’s method of evaluating climate sensitivity. We have made explicit the identities, interrelations, and values of the key variables, many of which the IPCC does not explicitly describe or quantify. The IPCC’s method does not provide a secure basis for policy-relevant conclusions. We now summarize some of its defects.

The IPCC’s methodology relies unduly, indeed almost exclusively, upon numerical analysis, even where the outputs of the models upon which it so heavily relies are manifestly and significantly at variance with theory, observation, or both. Modeled projections such as those upon which the IPCC’s entire case rests have long been proven impossible when applied to mathematically chaotic objects, such as the climate, whose initial state can never be determined to sufficient precision. For a similar reason, those of the IPCC’s conclusions that are founded on probability distributions in the chaotic climate object are unsafe.

Not one of the key variables necessary to any reliable evaluation of climate sensitivity can be measured empirically. The IPCC’s presentation of its principal conclusions as though they were near-certain is accordingly unjustifiable. We cannot even measure mean global surface temperature anomalies to within a factor of 2; and the IPCC’s reliance upon mean global temperatures, even if they could be correctly evaluated, itself introduces substantial errors in its evaluation of climate sensitivity.

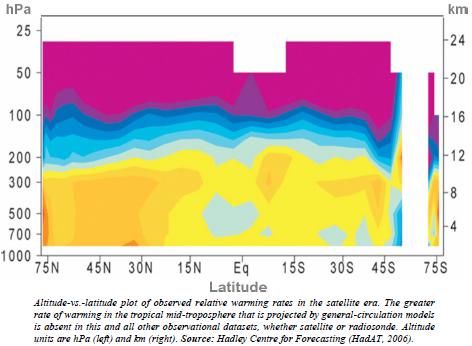

The IPCC overstates the radiative forcing caused by increased CO2 concentration at least threefold because the models upon which it relies have been programmed fundamentally to misunderstand the difference between tropical and extra-tropical climates, and to apply global averages that lead to error. The IPCC overstates the value of the base climate sensitivity parameter for a similar reason. Indeed, its methodology would in effect repeal the fundamental equation of radiative transfer (Eqn. 18), yielding the impossible result that at every level of the atmosphere ever-smaller forcings would induce ever-greater temperature increases, even in the absence of any temperature feedbacks.

The IPCC overstates temperature feedbacks to such an extent that the sum of the high-end values that it has now, for the first time, quantified would cross the instability threshold in the Bode feedback equation and induce a runaway greenhouse effect that has not occurred even in geological times, despite CO2 concentrations almost 20 times today’s and temperatures up to 7 °C higher than today’s. Furthermore, the Bode equation is of questionable utility because it was not designed to model feedbacks in non-linear objects such as the climate. The IPCC’s quantification of temperature feedbacks is, accordingly, inherently unreliable. It may even be that, as Lindzen (2001) and Spencer (2007) have argued, feedbacks are net-negative, though a more cautious assumption has been made in this paper.

It is of no little significance that the IPCC’s value for the coefficient in the CO2 forcing equation depends on only one paper in the literature; that its values for the feedbacks that it believes account for two-thirds of humankind’s effect on global temperatures are likewise taken from only one paper; and that its implicit value of the crucial parameter κ depends upon only two papers, one of which had been written by a lead author of the chapter in question, and neither of which provides any theoretical or empirical justification for a value as high as that which the IPCC adopted.

The IPCC has drawn not on thousands of published, peer-reviewed papers to support its central estimates for the variables from which climate sensitivity is calculated, but on a handful. On this brief analysis, it seems that no great reliance can be placed upon the IPCC’s central estimates of climate sensitivity, still less on its high-end estimates. The IPCC’s assessments, in their current state, cannot be said to be “policy-relevant”. They provide no justification for taking the very costly and drastic actions advocated in some circles to mitigate “global warming”, which Eqn. (30) suggests will be small (<1 °C at CO2 doubling), harmless, and beneficial.

Conclusion

Even if global temperature has risen, it has risen in a straight line at a natural 0.5 °C/century for 300 years since the Sun recovered from the Maunder Minimum, long before we could have had any influence (Akasofu, 2008). Even if warming had sped up, temperature today is 7 °C below that of most of the past 500 million years, 5 °C below all four recent interglacials, and up to 3 °C below the Bronze Age, Roman and mediaeval optima (Petit et al., 1999; IPCC, 1990).

Even if today’s warming were unprecedented, the Sun is the probable cause. It was more active in the past 70 years than in the previous 11,400 (Usoskin et al., 2003; Hathaway et al., 2004; IAU, 2004; Solanki et al., 2005). Even if the Sun were not to blame, the UN’s climate panel has not shown that humanity is to blame. CO2 occupies only one-ten-thousandth more of the atmosphere today than it did in 1750 (Keeling & Whorf, 2004).
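The “one-ten-thousandth” figure is a difference of volume mixing ratios. A minimal Python check follows, assuming roughly 280 ppmv of CO2 in 1750 and roughly 385 ppmv at the time of writing; neither value is stated in the text, so both are labelled assumptions, and the function name is illustrative.

```python
# Assumed approximate CO2 volume mixing ratios (parts per million by volume);
# these values are not given in the text.
PPMV_1750 = 280.0
PPMV_RECENT = 385.0


def added_fraction_of_atmosphere(ppmv_then: float, ppmv_now: float) -> float:
    """Increase in CO2 mixing ratio expressed as a fraction of the whole atmosphere."""
    return (ppmv_now - ppmv_then) * 1e-6


extra = added_fraction_of_atmosphere(PPMV_1750, PPMV_RECENT)
print(f"{extra:.2e} of the atmosphere, roughly 1 part in {round(1 / extra):,}")
```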

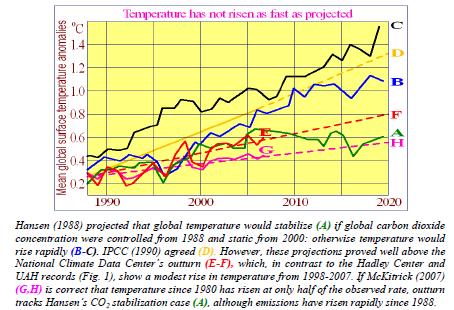

Even if CO2 were to blame, no “runaway greenhouse” catastrophe occurred in the Cambrian era, when there was ~20 times today’s concentration in the air. Temperature was just 7 °C warmer than today (IPCC, 2001). Even if CO2 levels had set a record, there has been no warming since 1998. For 7 years, temperatures have fallen. The January 2007 to January 2008 fall was the steepest since 1880 (GISS; Hadley; NCDC; RSS; UAH: all 2008).

Even if the planet were not cooling, the rate of warming is far less than the UN imagines. It would be too small to cause harm. There may well be no new warming until 2015, if then (Keenlyside et al., 2008). Even if warming were harmful, humankind’s effect is minuscule. “The observed changes may be natural” (IPCC, 2001; cf. Chylek et al., 2008; Lindzen, 2007; Spencer, 2007; Wentz et al., 2007; Zichichi, 2007; etc.). Even if our effect were significant, the UN’s projected human fingerprint – tropical mid-troposphere warming at thrice the surface rate – is absent (Douglass et al., 2004, 2007; Lindzen, 2001, 2007; Spencer, 2007).

Even if the human fingerprint were present, climate models cannot predict the future of the complex, chaotic climate unless we know its initial state to an unattainable precision (Lorenz, 1963; Giorgi, 2005; IPCC, 2001). Even if computer models could work, they cannot predict future rates of warming. Temperature response to atmospheric greenhouse-gas enrichment is an input to the computers, not an output from them (Akasofu, 2008). Even if the UN’s imagined high “climate sensitivity” to CO2 were right, disaster would not be likely to follow. The peer-reviewed literature is near-unanimous in not predicting climate catastrophe (Schulte, 2008).

Even if Al Gore were right that harm might occur, “the Armageddon scenario he depicts is not based on any scientific view”. Sea level may rise 1 ft by 2100, not 20 ft (Burton, J., 2007; IPCC, 2007; Moerner, 2004). Even if Armageddon were likely, scientifically unsound precautions are already starving millions as biofuels, a “crime against humanity”, pre-empt agricultural land, doubling staple cereal prices in a year (UNFAO, 2008). Even if precautions were not killing the poor, they would work no better than the “precautionary” ban on DDT, which killed 40 million children before the UN at last ended it (Dr. Arata Kochi, UN malaria program, 2006).

Even if precautions might work, the strategic harm done to humanity by killing the world’s poor and destroying the economic prosperity of the West would outweigh any climate benefit (Henderson, 2007; UNFAO, 2008). Even if the climatic benefits of mitigation could outweigh the millions of deaths it is causing, adaptation as and if necessary would be far more cost-effective and less harmful (all economists except Stern, 2006). Even if mitigation were as cost-effective as adaptation, the public sector – which emits twice as much carbon to do a given thing as the private sector – must cut its own size by half before it preaches to us (Friedman, 1993).

In short, we must get the science right, or we shall get the policy wrong. If the concluding equation in this analysis (Eqn. 30) is correct, the IPCC’s estimates of climate sensitivity must have been very much exaggerated. There may, therefore, be a good reason why, contrary to the projections of the models on which the IPCC relies, temperatures have not risen for a decade and have been falling since the phase transition in global temperature trends that occurred in late 2001. Perhaps real-world climate sensitivity is very much below the IPCC’s estimates. Perhaps, therefore, there is no “climate crisis” at all. At present, then, in policy terms there is no case for doing anything. The correct policy approach to a non-problem is to have the courage to do nothing.
